#BLOG3 Say hello to Google Cloud's Codey, Chirp, and Imagen!

Google’s Vertex AI is Google Cloud’s machine learning platform, and its generative AI features give you access to pre-trained models that help you work with your data more effectively. You can use its PaLM technology to build your own generative AI applications. Many tech companies use these features because they are easy to access and let them offer generated content to their own users. Canva, a multinational graphic design company, uses them to generate stories for its users: Canva users can select a few images from their gallery and, with just a few clicks, transform them into a beautiful-looking story. Consulting firms such as Deloitte, BCG, and Accenture, as well as various SaaS companies, also use these features.

It can also be integrated with Enterprise Search, software used to search for information inside an organization. Enterprise search identifies content and enables it to be indexed, searched, and displayed to authorized users across the enterprise.

The enterprise search process occurs in three main phases:

Exploration - The enterprise search engine crawls all data sources, gathering information from across the organization’s internal and external data sources.

Indexing - After the data has been collected, the enterprise search platform analyzes and enriches it by tracking relationships within the data, then stores the results to enable fast, accurate information retrieval.

Search - On the front end, employees request information in their native languages. The enterprise search platform then returns the content, or parts of content, that appear most relevant to the query. The response also factors in the employee’s work context, so different people may get different answers based on their work and search histories.
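To make the three phases concrete, here is a minimal Python sketch of a toy search pipeline. It is not how Vertex AI or any Enterprise Search product is implemented: the in-memory `SOURCES` dictionary, the `allowed` access groups, and the functions `explore`, `build_index`, and `search` are all hypothetical, and a plain inverted index stands in for the richer analysis and relationship tracking a real platform performs. Filtering by the user’s group is a simple stand-in for showing content only to authorized users.

```python
from collections import defaultdict

# Hypothetical in-memory "data sources"; a real engine would crawl wikis, drives, email, etc.
SOURCES = {
    "wiki": [
        {"id": "w1", "text": "Quarterly sales report for the EMEA region", "allowed": {"sales", "exec"}},
        {"id": "w2", "text": "Onboarding guide for new engineers", "allowed": {"eng"}},
    ],
    "drive": [
        {"id": "d1", "text": "Sales playbook and pricing guide", "allowed": {"sales"}},
    ],
}


def explore(sources):
    """Exploration: gather every document from every source into one list."""
    return [doc for docs in sources.values() for doc in docs]


def build_index(docs):
    """Indexing: map each lowercase term to the set of document ids containing it."""
    index = defaultdict(set)
    by_id = {}
    for doc in docs:
        by_id[doc["id"]] = doc
        for term in doc["text"].lower().split():
            index[term].add(doc["id"])
    return index, by_id


def search(query, group, index, by_id):
    """Search: rank documents by matched query terms, keeping only ones the user may see."""
    scores = defaultdict(int)
    for term in query.lower().split():
        for doc_id in index.get(term, ()):
            # Access control: skip documents the user's group is not allowed to read.
            if group in by_id[doc_id]["allowed"]:
                scores[doc_id] += 1
    # Highest score first; ties broken by id for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))
```

For example, `index, by_id = build_index(explore(SOURCES))` followed by `search("sales guide", "sales", index, by_id)` returns `["d1", "w1"]`, while the same query from the `"eng"` group returns only `["w2"]`, because each user only sees documents their group is allowed to read.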

In addition to PaLM 2, Google has introduced new models in Vertex AI:

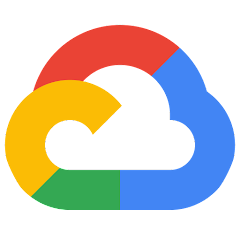

Imagen, which generates and edits images from text prompts.

You can find its research paper here.

The input to Imagen Editor is a masked image and a text prompt; the output is an image in which the unmasked areas are untouched and the masked areas are filled in. The edits stay faithful to the text prompt while remaining consistent with the input image, as seen in the image below.
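The input/output contract described above, where only the masked region changes, can be illustrated with a small Python sketch. This is not Imagen Editor’s implementation: `fake_generator` is a hypothetical stand-in for a text-conditioned model, and images are assumed to be small grayscale grids of integers. The sketch only shows a compositing step that, here, keeps unmasked pixels untouched while masked pixels come from the generated fill.

```python
from typing import List

Image = List[List[int]]   # grayscale pixels, row-major
Mask = List[List[bool]]   # True where the model is allowed to edit


def composite_edit(image: Image, mask: Mask, generated: Image) -> Image:
    """Keep unmasked pixels from the input; take masked pixels from the generated fill."""
    return [
        [gen if m else orig for orig, m, gen in zip(img_row, mask_row, gen_row)]
        for img_row, mask_row, gen_row in zip(image, mask, generated)
    ]


def fake_generator(image: Image, prompt: str) -> Image:
    """Hypothetical stand-in for a text-conditioned model: fills every pixel with one value."""
    value = 255 if "white" in prompt else 0
    return [[value for _ in row] for row in image]
```

With `image = [[10, 20], [30, 40]]` and a mask that is `True` only at the top-right pixel, `composite_edit(image, mask, fake_generator(image, "a white cloud"))` returns `[[10, 255], [30, 40]]`: only the masked pixel changes.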

It is still in beta and available only to a limited number of users. To try Imagen, you can sign up for the waitlist on the Google AI website.

Codey, which generates code, completes code as you write, and lets you chat with a bot that helps you debug your code!

Codey supports a range of programming languages, such as Java, Go, SQL, Python, and TypeScript.

Chirp, which brings speech-to-text to over 100 languages.

Chirp is built on a 2-billion-parameter speech model. It achieves 98% accuracy in English and a relative improvement of up to 300% in languages with fewer than 10 million speakers.
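Speech recognition accuracy is commonly reported as 1 minus the word error rate (WER), where WER counts the word substitutions, deletions, and insertions needed to turn the model’s transcript into the reference transcript, divided by the number of reference words. Assuming the 98% figure follows that convention, it would correspond to a WER of about 2%. The minimal Python sketch below computes WER with a word-level edit distance; the function names are illustrative, not part of any Google API.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dp[i][j] = edit distance between the first i reference words and first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i
    for j in range(len(hyp) + 1):
        dp[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # match or substitution
            )
    return dp[len(ref)][len(hyp)] / len(ref)


def accuracy(reference: str, hypothesis: str) -> float:
    """Accuracy under the common 1 - WER convention."""
    return 1.0 - word_error_rate(reference, hypothesis)
```

For instance, a 50-word reference transcript with one misrecognized word gives a WER of 1/50 = 0.02, so `accuracy` returns approximately 0.98.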

The image above shows how the Automatic Speech Recognition (ASR) model that Google uses for Chirp works. Training happens in three stages.

The first stage trains a Conformer backbone on a large unlabeled speech dataset, optimizing for the BEST-RQ objective (BERT-based Speech pre-Training with Random-projection Quantizer), which is used to pre-train the model’s encoder.
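BEST-RQ gets its training targets without any labels by passing speech features through a random-projection quantizer: a randomly initialized, frozen projection matrix and a frozen random codebook map each frame to the index of its nearest codebook vector, and the encoder learns to predict those indices for masked frames. The minimal Python sketch below shows only the quantizer step. It is a simplification that omits the feature preprocessing, the masking, and the Conformer encoder itself; the class name and sizes are illustrative.

```python
import math
import random
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


class RandomProjectionQuantizer:
    """Frozen random projection plus frozen random codebook, in the spirit of BEST-RQ.

    Neither the projection nor the codebook is ever trained; they only turn
    continuous speech features into discrete target labels for masked prediction.
    """

    def __init__(self, feature_dim: int, code_dim: int, codebook_size: int, seed: int = 0):
        rng = random.Random(seed)
        # Projection matrix: code_dim x feature_dim, randomly initialized and frozen.
        self.projection = [[rng.gauss(0.0, 1.0) for _ in range(feature_dim)] for _ in range(code_dim)]
        # Codebook: codebook_size vectors of length code_dim, normalized once and frozen.
        self.codebook = [
            l2_normalize([rng.gauss(0.0, 1.0) for _ in range(code_dim)])
            for _ in range(codebook_size)
        ]

    def label(self, frame: Vector) -> int:
        """Return the index of the codebook vector nearest to the normalized projection of frame."""
        projected = l2_normalize([sum(a * x for a, x in zip(row, frame)) for row in self.projection])
        distances = [sum((c - p) ** 2 for c, p in zip(code, projected)) for code in self.codebook]
        return min(range(len(distances)), key=distances.__getitem__)
```

Because the projected vector is L2-normalized before the nearest-neighbor lookup, scaling a frame (for example, multiplying every value by 2) does not change its label, and the same seed always reproduces the same quantizer.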

The second stage continues training this speech representation model while optimizing for multiple objectives: the BEST-RQ objective on unlabeled speech; the modality-matching, supervised ASR, and duration-modeling losses on paired speech and transcript data; and the text-reconstruction objective with an RNN-T decoder on unlabeled text.
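This stage mixes three kinds of data, each with its own objectives. One simple way to organize that, shown in the Python sketch below, is a table from data type to objectives plus a weighted sum of whichever losses apply to the current batch. The data-type keys, objective names, and weights are hypothetical; Google’s actual loss weighting and batching scheme may differ.

```python
from typing import Dict, List

# Which objectives apply to which kind of training batch, per the second-stage description.
OBJECTIVES_BY_DATA: Dict[str, List[str]] = {
    "unlabeled_speech": ["best_rq"],
    "paired_speech_text": ["modality_matching", "supervised_asr", "duration_modeling"],
    "unlabeled_text": ["text_reconstruction"],
}


def combined_loss(data_type: str, losses: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of the objectives that apply to this batch's data type.

    `losses` holds per-objective loss values computed elsewhere; `weights` are
    hypothetical hyperparameters (missing entries default to 1.0).
    """
    return sum(weights.get(name, 1.0) * losses[name] for name in OBJECTIVES_BY_DATA[data_type])
```

With weights of 0.5 for modality matching and 0.25 for duration modeling, a paired batch with losses of 2.0, 1.0, and 4.0 for modality matching, supervised ASR, and duration modeling combines to 0.5 × 2.0 + 1.0 × 1.0 + 0.25 × 4.0 = 3.0.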

The third stage fine-tunes this pre-trained encoder on ASR or AST (Automatic Speech Translation) tasks.

The link to the research paper by Google can be found here.

Here is a quick read on Immersive View, a feature announced at Google I/O 2022 that was a showstopper.

Google Maps’ new AI feature makes you feel like you are already there before you even visit.

More Immersive, Intuitive Map: This feature combines Street View and aerial photos to create 3D representations of the route you are planning to take. It also gives detailed information about the weather, crowd levels, and ambience of the place. At present, Immersive View is rolling out to five cities: Los Angeles, San Francisco, New York, London, and Tokyo.

The goal of this feature is to let users plan their trip in detail before leaving home and get a feel for the place they are visiting.

You can even get a live, animated 3D weather forecast with this feature. For example, if you want to know whether it is going to rain in NYC at 11 a.m., you can head straight to Immersive View in Google Maps and check the forecast along with live AQI (Air Quality Index) updates.

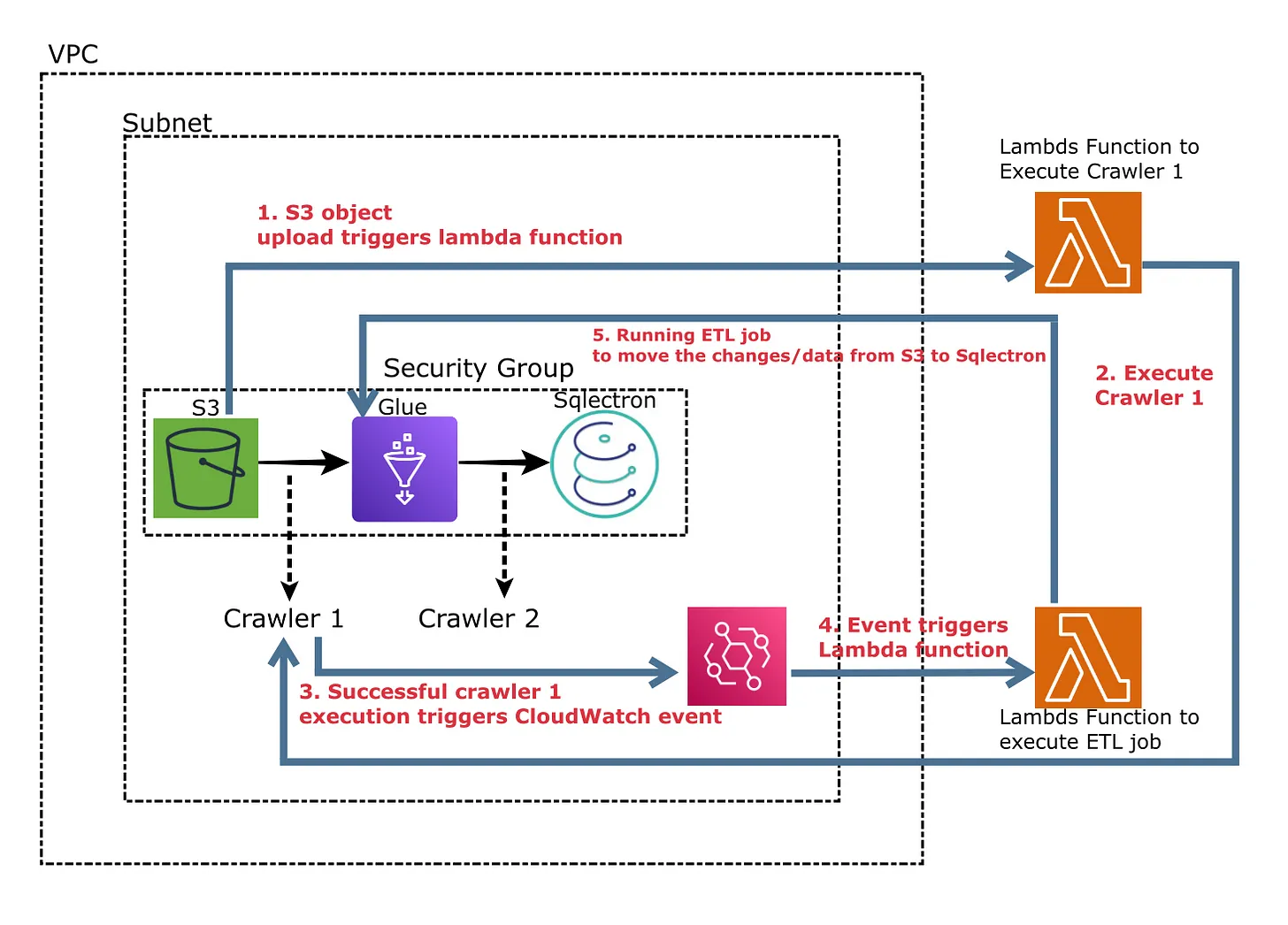

If you liked this blog, do read our earlier blog project on AWS, where we built a working ETL setup using AWS Glue x Lambda x Sqlectron x S3.


You can run an ad or news item in our newsletter to get ahead by showcasing your product, article, project, or innovation in tech and bringing your target audience closer to you.